Mortgage Brokers

Most if not all of my tiny readership will be surprised to know that retail financial services are one of my pet peeves. I worked in the industry for a couple of years and have a pretty good idea of how it works, and my wife does research in the area of persuasion (like most of her colleagues and her mentor she comes at it from the angle of having been -- like most of us -- more often a victim than a perpetrator).

Anyways, according to the foremost expert on the subject (see link above), there are six basic principles of persuasion:

- Reciprocity: "even if you don't like it, you can keep the steak knives as our gift to you".

- Commitment and Consistency: "either you're for us, or you're against us"

- Social Proof: "are you missing the highest rated drama to come out this Fall?"

- Authority: "nine out of ten dentists agree"

- Liking: ok this is so obvious it doesn't need an example

- Scarcity: "this is a strictly limited offer, limit of five per customer"

Scarcity is listed last because, for some reason, it's the last one in the Wikipedia summary and I'm too lazy to look up the order Dr. Cialdini chooses, but manufactured scarcity is perhaps the most overused tactic because it's cheap (usually free) and extremely effective. This is why folks will buy stuff on sale that they don't have any conceivable use for.

Anyway, the reason I dredge up this sordid topic is that -- like many other Californian mortgage owners -- I've been looking at refinancing a home loan. Homeloans are, at heart, a very simple, low risk, high margin product. You buy some real estate worth X, but you only have some amount Y, much less than X, that you're able to pay. You borrow the difference plus padding P, i.e. X - Y + P, at an interest rate I (which is greater than the "dead safe investment" rate for, say, government bonds by a profit margin and risk assessment), and you offer the real estate as collateral.

So the basic principle is that if you can't pay, the lender gets the real estate which is virtually certain to be worth a lot more than the missing money, because real estate doesn't drop in value ... right?

Out of Whack

Unfortunately, homeloans have become a LOT more complicated because all the underlying assumptions prevented a lot of people from buying houses, especially in really insane places like California where house prices are completely out of whack.

How can house prices be "completely out of whack"? Surely the US is a "free market" country and the market must -- by definition -- be correctly gaging (I just discovered that Americans can't spell "gauging" either) the value of houses.

Well here's the way you can tell if property values are completely out of whack. If the rental income from a property is nowhere near the cost of renting the same amount of cash (i.e. a mortgage for the whole darn thing) then the property value is completely out of whack. Cash, you see, is a perfectly liquid asset. You don't have to pay special taxes just to have it (quite the contrary). You don't need to paint it. It doesn't get vandalized. You can easily exchange it for anything of equivalent value. A house, on the other hand, has many many annoying disadvantages including the preceding, as well as being highly illiquid and generally costing a lot of money (6% in commissions for starters) to convert into cash.

So on the one hand you have $500,000 in cash, which would cost at very minimum $30,000 a year to rent (in the form of a secured loan), but on the other hand you have $500,000 "worth" of house, which would cost an absolute maximum of $20,000 a year to rent. Out. Of. Whack.
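To put the two figures side by side, here is a rough sketch in Python. The function name `annual_yield` is made up for illustration; the dollar amounts are the ones quoted above, and nothing here accounts for taxes, upkeep, or commissions.

```python
def annual_yield(annual_amount, asset_value):
    """Express a yearly dollar amount as a fraction of the asset's value."""
    return annual_amount / asset_value

asset_value = 500_000
cost_to_borrow = 30_000  # minimum yearly cost of "renting" the cash (from the post)
rent_income = 20_000     # maximum yearly rent for the house (from the post)

borrow_rate = annual_yield(cost_to_borrow, asset_value)
rental_yield = annual_yield(rent_income, asset_value)
gap = cost_to_borrow - rent_income

print(f"cash rents at {borrow_rate:.1%}, the house rents at {rental_yield:.1%}")
print(f"gap: ${gap:,} a year")
```

When the first percentage is well above the second, as it is here, the property is priced above what its rent would justify by this yardstick.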

Needfully Complicated

If a house is clearly worth X, and someone wants to buy it, but can only front Y, but is probably able to pay off a loan for X - Y + P at an interest rate I that is safely above the interest rate a bank pays its depositors, then it's an obvious winning deal, and the resulting product is simple.

But if the house is not clearly worth X at all, someone wants to buy it but maybe can't front any money at all, and maybe can't pay off a loan for X + P even at fake interest rate I - Q, and the lender isn't a bank with depositors, but a speculator with a bunch of juggled loans, IOUs, and chutzpah, then the product gets a lot more complicated.

That's bad enough. It's very bad that financially illiterate people have been royally screwed by a huge, byzantine, greedy, and surprisingly incompetent bunch of people trying to make quick money in seriously out of whack property markets, but the real problem is that the homeloan product has become ridiculously complicated for everyone and will probably never get fixed.

All I need is your social security number

When you talk to a mortgage broker (at least, a typical call center one -- the honest ones are quite different) you'll be asked a whole bunch of questions, typically:

Where is the property?

How much is it worth? (Rough guess is fine.)

How much do you owe? (Rough guess is fine.)

How much are you paying? (Rough guess is fine.)

What do you make? (Ballpark is fine.)

How's your credit? (Ballpark is fine.)

What is your social security number?

Here's the rub. It's OK to guesstimate the amount of money involved, the amount you owe, how much you're currently paying, and your income, but to get to the next step, they need your social? WTF?

They don't need your social security number at all -- not at this stage. Either they don't need any of the information they asked for (aside from the address of the property), or they don't trust you, or it is what it is: a commitment and consistency play. The next step, after they offer you a completely rubbery set of figures (assuming you gave them your social security number) will be to ask for a deposit which will be completely refundable at signing. The question is, will it be completely refundable if there is no signing?

Getting to Yes (or No)

The fact is that, underneath it all, a home loan that doesn't suck is still a very simple product. You're borrowing Y < X to buy (or continue "owning") a house worth X, and you promise to make the payments or hand over the house. There's no real risk for the lender (since X > Y, and X is very unlikely to drop) and the borrower knows exactly what he/she is signing up for. It follows that a mortgage broker who is dealing in mortgages that don't suck can easily tell whether he/she has a good deal for a prospective customer with just three figures: X, Y, and your credit rating. Based on this, they can either sketch out a very compelling offer, or tell you that they have nothing to offer you at this time.

If the broker needs a day to get back to you with figures, then basically they're figuring out how to sell you a home loan that seriously sucks.

Crunching the Numbers

So, you've given some random person your social security number and they've gone off to crunch the numbers. It will take at least half an hour. More often than not it will take overnight. It's obviously a lot of work, so either they're going to work like mad to get it done in half an hour (they're excited to be working with you) or they'll get back to you in a day (because it's so much work).

In fact, everything they're doing is canned and takes no more than entering a few figures in a standard spreadsheet that sits on their server. It could be even easier, but (a) the companies they work for are spectacularly incompetent with technology (seriously, like you wouldn't believe), (b) the folks selling these products probably can't multiply, and (c) making it easier is not helpful to the sales process; it's already way too easy.

We're talking reciprocity here. They can't just tell you what your situation is instantly because then they wouldn't have done this huge favor for you, their special friend, for absolutely FREE. No obligation!

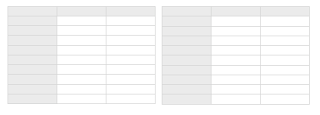

In fact, if you look at this spreadsheet you'll see that it's easy to calculate a "mortgage payment per $100,000 of principal" for any given interest rate, and so all you need to know is the amount and the interest rate and you know exactly what the deal looks like. At 6% a $100,000 30 year mortgage costs about $600 per month. A $250,000 mortgage would therefore cost you ... look I'll get back to you in thirty minutes after I crunch some numbers.
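For the impatient, the per-$100,000 shortcut can be sketched in a few lines of Python. This is my own rough version, not the spreadsheet's formula: it uses the standard fixed-payment loan formula and assumes the quoted rate is a nominal annual rate charged in twelve equal monthly slices. The function names are invented for the example.

```python
def payment_per_100k(annual_rate, years=30):
    """Monthly payment on $100,000 of principal.

    Assumes annual_rate is a nominal yearly rate (0.06 for 6%),
    charged monthly at annual_rate / 12. Rate must be non-zero.
    """
    r = annual_rate / 12
    n = years * 12
    return 100_000 * r / (1 - (1 + r) ** -n)

def monthly_payment(principal, annual_rate, years=30):
    """Scale the per-$100,000 figure to any loan amount."""
    return principal / 100_000 * payment_per_100k(annual_rate, years)

print(round(payment_per_100k(0.06), 2))          # 599.55
print(round(monthly_payment(250_000, 0.06), 2))  # 1498.88
```

Because the payment scales linearly with the amount borrowed, one number per interest rate is all the "crunching" there is.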

The Hard Sell

Well, we've crunched those numbers, just for you (reciprocity). It was hard, complicated work, and we've got a 20 page printout to prove it was hard. Can we fax it to you? We don't actually have email here (or direct phone lines) even though we're a big established company with a fabulous website (authority).

The deal is insanely worse than you were expecting (this is bait and switch which is what you do after playing commitment and consistency), but -- and here's the good news -- it will "save" you a lot of money. Really. The monthly payment will be lower. Really.

And now's the best time to take the deal, because:

The price of real estate is rising (if you're buying) OR

The price of real estate is falling (if you're refinancing) OR

Interest rates are rising OR

Interest rates are about to rise* OR

This is a special deal, just for you for [insert random excuse]

* I've been hearing this one consistently for the last year, and they persist with this obvious baloney even when I quote The Economist. Not only are (as of writing) interest rates consistently falling, but the "R-word" is being bandied about.

The last is the Hail Mary scarcity tactic. If you hear that, you know the person you're talking to is running on empty. This is fear incarnate you're hearing.

That's it. You can tell a good homeloan from a bad one because it's simple. A bad one is complex. That's it. OK, even the simple version seems complex ... how can you tell? Well, if the simple inputs into the equation: how much you owe (before the loan and after) or how much you're paying (now and in the future) are complicated, it's not simple any more ... and either you're a financial whiz finessing tax loopholes (in which case you don't need my help) or someone is screwing you.

Final Note: Some High School Math

You probably don't know how to calculate compound interest payments. Just figuring out how to use Excel's formulae is pretty nasty. The underlying math is very simple.

Let's suppose you're depositing $1 per month into an account that accrues monthly compounding interest of I%. The amount of money you accrue is a geometric series, i.e. after N months:

$1 (today's deposit) + $1 * (1 + I) ^ 1 + $1 * (1 + I) ^ 2 + ... + $1 * (1 + I) ^ (N - 1)

OR

1 + 1 * R + 1 * R^2 + ... + 1 * R^(N-1) (where R = 1 + I)

There's a neat formula for calculating this (which I learned in High School; your mileage may vary):

S = ( 1 - R ^ N ) / ( 1 - R )

You may have seen this with an "A" out the front, but I've simplified this by assuming the initial term is 1.

Now this tells us how much we'll have "paid back" at an interest rate of I with payments of $1 over N months.

We can work out how much we owe as: T = P * (1 + I) ^ N. (This is the principal -- i.e. loan amount -- compounded at the same rate for N months.)

So, to find out the monthly payment, divide this result by the earlier sum:

Payment = T / S.
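If you'd rather check this than take it on faith, here is a small Python sketch of Payment = T / S, plus a brute-force loop that pays the loan down month by month. The function names are mine, and I is passed as a fraction (0.005 for half a percent per month), which is an assumption about how to read "I%" above.

```python
def payment_from_series(principal, monthly_rate, months):
    """Payment = T / S, using the symbols from the text."""
    R = 1 + monthly_rate                # R = 1 + I (I must be non-zero)
    S = (1 - R ** months) / (1 - R)     # value of $1 a month after N months
    T = principal * R ** months         # the loan compounded for N months
    return T / S

def balance_after(principal, monthly_rate, months, payment):
    """Add a month's interest, subtract the payment, repeat."""
    balance = principal
    for _ in range(months):
        balance = balance * (1 + monthly_rate) - payment
    return balance

pay = payment_from_series(100_000, 0.005, 360)
leftover = balance_after(100_000, 0.005, 360, pay)
print(round(pay, 2))
print(abs(leftover) < 0.01)  # True if the loan is paid off, give or take rounding
```

The loop knows nothing about geometric series, so if it lands on a zero balance the formula is doing its job.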

You can quickly verify this formula on your mortgage with one more piece of information: in the US lenders are legally allowed to misrepresent interest rates. In many other countries, lenders must give you an APR ("Annual Percentage Rate" which is to say "Interest Rate when I don't flat out lie"), but in the US they're allowed to say 12%, when in fact they mean 1% compounded monthly, which is significantly more (12.7%).
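The gap between the quoted rate and the compounded one is a one-liner to check. A minimal sketch in Python, with a function name I made up:

```python
def effective_annual_rate(nominal_annual_rate, periods_per_year=12):
    """Yearly rate you actually pay when a nominal rate is compounded
    periods_per_year times (monthly by default)."""
    per_period = nominal_annual_rate / periods_per_year
    return (1 + per_period) ** periods_per_year - 1

print(f"{effective_annual_rate(0.12):.2%}")  # 12.68%
```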

That's it.